Fundamentals of Sound


Perceptual attributes of acoustic waves

SOUND SOURCE AUDITORY LOCALIZATION

Introduction / Definitions

Auditory Localization Cues

Interaural Intensity/Level Differences (IID / ILD) & Interaural Time/Phase Differences (ITD / IPD)

Monaural & Interaural Spectral Differences - HRTFs / ATFs

Binaural Cues & "Release from Masking"

"Cone of Confusion" - Head-Movement Localization Cues

Judging Sound Source Distance

The Precedence Effect

Optional Section: Sound source localization neural mechanisms

Sound-Source Auditory Localization

Introduction / Definitions


The term auditory localization describes judgments on the location (orientation & distance), movement, and size of a sound source, based solely on auditory cues.

Terminology

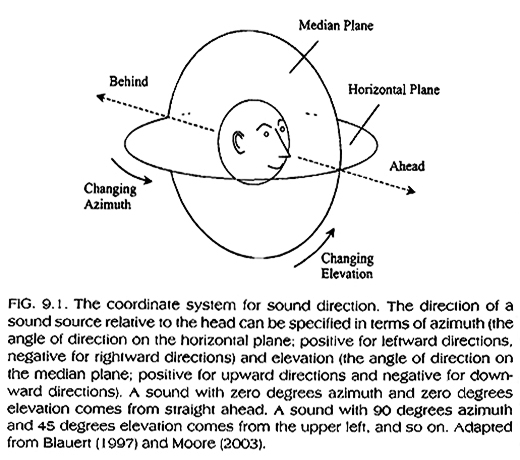

Auditory localization judgments are mainly described in terms of the apparent position of a sound source relative to the listener's head (see to the right).

Lateralization describes judgments on the apparent position of a sound source when listening through stereo headphones, where the sound image is confined to the inside of the listener's head.

(in Plack, 2005: 174)

The just-noticeable difference (JND) of auditory localization is defined in terms of minimum audible angles (MAAs): the rotation in azimuth, elevation, or both that is necessary to convey a corresponding change in sound-source position relative to the head (see below and to the right).

MAAs depend on:

The smallest possible MAA is ~1°.

(Source: Freigang et al., 2014)

Interaural Intensity/Level Differences (IID / ILD) & Interaural Time/Phase Differences (ITD / IPD)

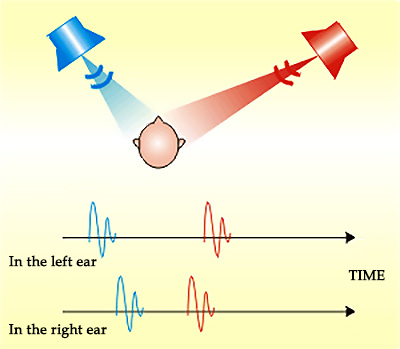

Interaural differences in intensity and arrival time (phase) constitute the most important sound-source localization cues.


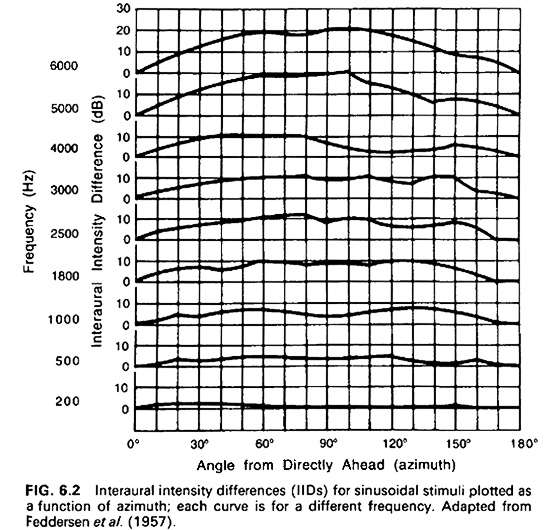

For high-frequency sound signals (>~1500 Hz), with wavelengths <~1/2 of the average head's circumference (i.e. <~9 inches or <~0.23 m), auditory localization judgments are based mainly on interaural intensity/level differences (IIDs or ILDs).

For frequencies below 500 Hz, IIDs are negligible (why?); above that, they increase gradually with frequency.
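The frequency limits above can be related to head size through the wavelength, λ = c / f. The following is a minimal sketch, assuming a speed of sound of 343 m/s (dry air at about 20 °C); the function name and constant are illustrative and not part of the original notes. It shows that a 1500 Hz tone has a wavelength of roughly 0.23 m, while a 500 Hz tone is about three times longer, which hints at why low frequencies bend around the head and leave little level difference between the ears.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value: dry air at about 20 degrees C


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength, in metres, of a sound wave of the given frequency."""
    return SPEED_OF_SOUND_M_S / frequency_hz


# Compare a few frequencies with the ~0.23 m figure quoted in the text.
for f in (100, 500, 1500, 3000):
    print(f"{f:>5} Hz -> {wavelength_m(f):.3f} m")
```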

(in Plack, 2005: 176)

Interaural Time Difference (ITD) vs. Interaural Phase Difference (IPD)

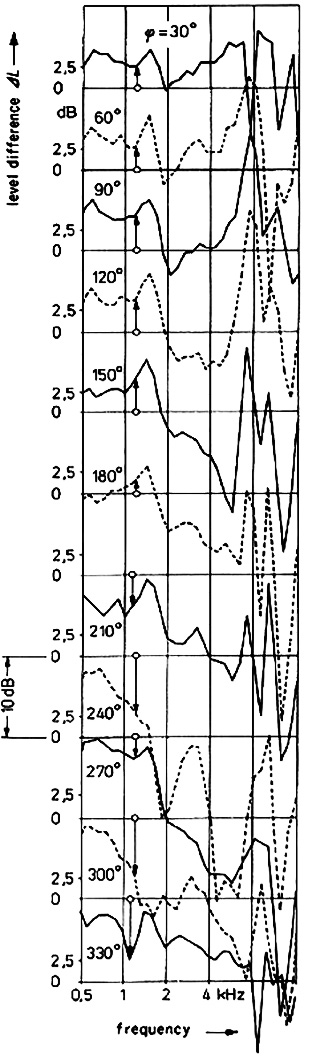

Monaural & Interaural Spectral Differences - HRTF / ATF

For sound signals of intermediate frequencies (~500 Hz < f < ~1500 Hz), IID and IPD cues do provide some useful localization information, but only in azimuth, and only if combined (IID cues are perceivable down to ~500 Hz and IPD cues are perceivable up to ~770 Hz).
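The relation between ITD and IPD, and one plausible reading of the ~770 Hz limit, can be sketched as follows. For a pure tone, IPD = 2π · f · ITD. The sketch assumes a maximum ITD of about 0.65 ms, a commonly quoted figure for an adult head that is not stated in these notes; under that assumption the largest ITD equals half a period (IPD = π, where left-leading and right-leading become indistinguishable) at roughly 770 Hz. The names below are illustrative.

```python
import math

MAX_ITD_S = 0.00065  # assumed: ~0.65 ms maximum ITD for an average adult head


def ipd_radians(itd_s: float, frequency_hz: float) -> float:
    """Interaural phase difference produced by a given ITD at one frequency."""
    return 2.0 * math.pi * frequency_hz * itd_s


def ambiguity_frequency_hz(max_itd_s: float = MAX_ITD_S) -> float:
    """Frequency at which the maximum ITD equals half a period (IPD = pi)."""
    return 1.0 / (2.0 * max_itd_s)


print(f"IPD becomes ambiguous near {ambiguity_frequency_hz():.0f} Hz")
```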


Interaural spectral differences due specifically to structural differences between the pinnae of the two ears contribute to better lateralization (i.e. more accurate virtual location of the sound within the head), especially in the median (i.e. elevation) plane. Pinna-related spectral filtering does not help us 'construct' a complete aural 'image' of the outside world.

The head-related transfer function (HRTF) is defined as the ratio of the sound pressure spectrum measured at the eardrum to the sound pressure spectrum that would exist at the center of the head if the head were removed. The figure to the right displays HRTFs as a function of sound-source elevation angle. From this and other similar datasets it has been inferred that:

Experimental explorations of HRTFs/ATFs use specially designed binaural heads (e.g. KEMAR, by G.R.A.S. Sound & Vibration, Denmark) to record signals at various positions along their path to the eardrum and to tease out the various anatomical spectral-filtering contributions.
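The HRTF definition given earlier (eardrum spectrum divided by the reference spectrum at the head-center position) can be made concrete with a small sketch. It assumes the two spectra are already available as lists of positive pressure amplitudes, one value per frequency bin; the function name and the sample numbers are invented for illustration and are not measured data.

```python
import math


def hrtf_magnitude_db(p_eardrum: list[float], p_reference: list[float]) -> list[float]:
    """Per-bin HRTF magnitude, in dB, from two pressure-amplitude spectra."""
    if len(p_eardrum) != len(p_reference):
        raise ValueError("spectra must have the same number of frequency bins")
    return [20.0 * math.log10(pe / pr) for pe, pr in zip(p_eardrum, p_reference)]


# Made-up amplitudes for three frequency bins (illustration only).
eardrum = [1.0, 2.0, 0.5]
reference = [1.0, 1.0, 1.0]
gains = hrtf_magnitude_db(eardrum, reference)
print([round(g, 1) for g in gains])
```

A doubling of pressure amplitude at the eardrum corresponds to a gain of about +6 dB, and a halving to about -6 dB.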


Binaural Cues & "Release from Masking"

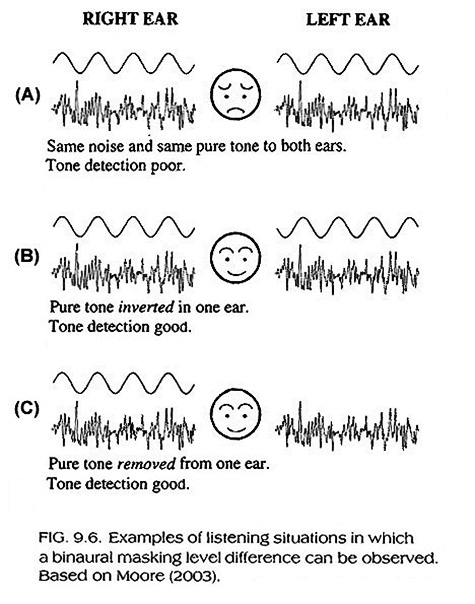

IID and IPD cues also help us perceive tones that would otherwise have been masked. The following three listening examples illustrate this point and correspond to the three scenarios described in the figure to the right (headphones required).

The release from masking of complex signals is facilitated further by interaural spectral differences. The described release from masking is not due to our ability to localize a signal thanks to the imposed interaural differences. Rather, it is due to signal de-correlation between the ears, which the interaural differences produce and which in turn supports cognitive strategies (e.g. attention focusing) for signal detection in complex sonic environments.

(in Plack, 2005: 180)
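The de-correlation idea can be illustrated numerically. The sketch below is an assumption-laden toy, not a reconstruction of the figure's scenarios: it uses identical noise in both ears and a 500 Hz tone that is either identical in both ears or phase-inverted in one ear, and compares the correlation between the two ear signals. The sample rate, tone level, and all names are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    count = len(x)
    mean_x, mean_y = sum(x) / count, sum(y) / count
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


random.seed(0)
SAMPLE_RATE = 8000  # Hz, assumed
NUM_SAMPLES = 4000  # half a second
noise = [random.gauss(0.0, 1.0) for _ in range(NUM_SAMPLES)]
tone = [0.5 * math.sin(2 * math.pi * 500 * i / SAMPLE_RATE) for i in range(NUM_SAMPLES)]

# Case A: same noise and same tone in both ears.
left_a = [n + s for n, s in zip(noise, tone)]
right_a = list(left_a)

# Case B: same noise in both ears, tone phase-inverted in the right ear.
left_b = left_a
right_b = [n - s for n, s in zip(noise, tone)]

corr_a = pearson(left_a, right_a)
corr_b = pearson(left_b, right_b)
print(f"identical tone: {corr_a:.3f}, inverted tone: {corr_b:.3f}")
```

In case A the ear signals are perfectly correlated, so the tone adds no interaural difference; in case B the inverted tone lowers the correlation, which is the kind of interaural change the text associates with release from masking.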

Cone of Confusion - Head-Movement Localization Cues


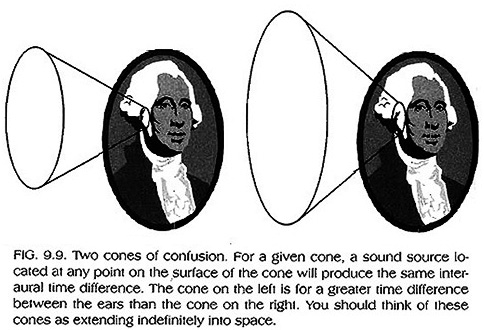

Cone of confusion

Sound sources moving in a sagittal plane (i.e. changing in elevation) do not produce IID or IPD changes. Consequently, their movement is difficult to track by purely auditory means. More generally, for any given IID or IPD value, there will be a conical surface extending out of the ear that will produce identical IIDs and IPDs, preventing precise sound source localization over the surface (see to the right).

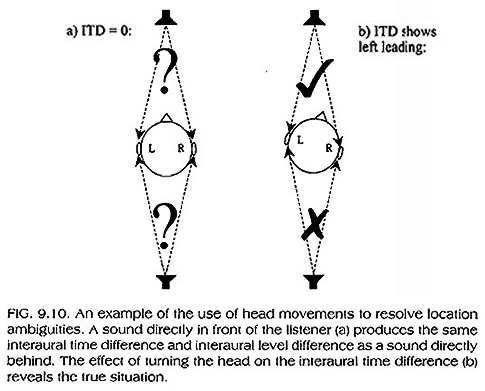

However, the most effective strategy in resolving sound-source localization ambiguities is head movement, assuming the sound signal lasts long enough, unchanged, to allow for such a movement to be of use. Moving the head in the horizontal plane can help resolve front-to-back ambiguities (e.g. below-left), while head tilting can help resolve top-to-bottom ambiguities.

(in Plack, 2005: 184)
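The front-to-back ambiguity, and the way a horizontal head turn resolves it, can be sketched with a deliberately simple model. The sketch assumes a distant source, two point-like ears 0.18 m apart, no head shadow, and ITD = (d / c) · sin(azimuth), with azimuth measured clockwise from straight ahead. These simplifications and all names are assumptions made for illustration.

```python
import math

EAR_SPACING_M = 0.18        # assumed distance between the ears
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound


def itd_s(source_azimuth_deg: float, head_azimuth_deg: float = 0.0) -> float:
    """ITD in seconds for a distant source; positive means the right ear leads."""
    relative = math.radians(source_azimuth_deg - head_azimuth_deg)
    return EAR_SPACING_M / SPEED_OF_SOUND_M_S * math.sin(relative)


front, back = 30.0, 150.0  # mirror-image positions about the interaural axis

# Head facing forward: both positions give the same ITD (cone of confusion).
print(itd_s(front), itd_s(back))

# Head turned 10 degrees to the right: the two ITDs now move in opposite directions.
print(itd_s(front, 10.0), itd_s(back, 10.0))
```

With the head still, the two positions are indistinguishable by ITD. After the turn, the ITD shrinks for the front source and grows for the back source, so the direction of change reveals which one is present.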


As is the case with most sound-source localization cues, the salience of head-movement cues depends largely upon long-term learning and experience. Auditory localization experiments using headphones and conflicting source and head movements exploit our reliance on previous experience, resulting in revealing illusions. Explore the figures, below, and read the explanations in the captions.

(in Plack, 2005: 185)


Judging Sound Source Distance

Distance and loudness

Distance and reverberation

Distance and spectral composition (timbre)


Precedence effect (Haas effect): The precedence effect describes a learned strategy employed implicitly by listeners in order to address conflicting or ambiguous localization cues occurring in environments where sound wave reflections play an important role (e.g. all rooms other than anechoic environments): the location of a sound is judged mainly from the wavefront that arrives first, with later-arriving reflections given less weight. The Haas effect, named after the German psychoacoustician Helmut Haas (mid 20th century), is a special case of the precedence effect. It refers to an audio processing technique that uses the precedence effect to create a wider stereo image from a mono source.

More specifically, for arrival-time differences of up to 30–40 milliseconds, the perceptual priority of the signal arriving first persists even if the second (delayed) signal is up to 10 dB stronger than the first. The figure to the left illustrates a precedence effect demonstration with two loudspeakers reproducing the same pulsed wave.

From "How We Localize Sound" by the American physicist W. M. Hartmann, a standard reference on the topic of sound source localization.
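The Haas-effect technique mentioned above, widening a mono source by delaying one channel, can be sketched in a few lines. This is a minimal illustration rather than a production audio routine: the function name, the choice to delay the right channel, and the 15 ms default (picked to sit below the 30–40 ms range quoted in the text) are all assumptions.

```python
def haas_widen(mono, sample_rate_hz, delay_ms=15.0):
    """Return (left, right), where right is a delayed copy of the mono signal.

    Both channels are zero-padded so they end up with the same length.
    """
    delay_samples = round(sample_rate_hz * delay_ms / 1000.0)
    left = list(mono) + [0.0] * delay_samples
    right = [0.0] * delay_samples + list(mono)
    return left, right


# Tiny example: a 3-sample signal at 1 kHz, delayed by 2 ms (2 samples).
left, right = haas_widen([1.0, 0.5, 0.25], sample_rate_hz=1000, delay_ms=2.0)
print(left)
print(right)
```

Because the delay stays within the precedence window, the two channels are expected to fuse into a single, wider image rather than being heard as a sound plus an echo.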


The figure, below, illustrates the interaction between interaural time & intensity differences, observed in experiments exploring the precedence effect.

[Optional: Brown et al., 2015. The Precedence Effect in Sound Localization.]

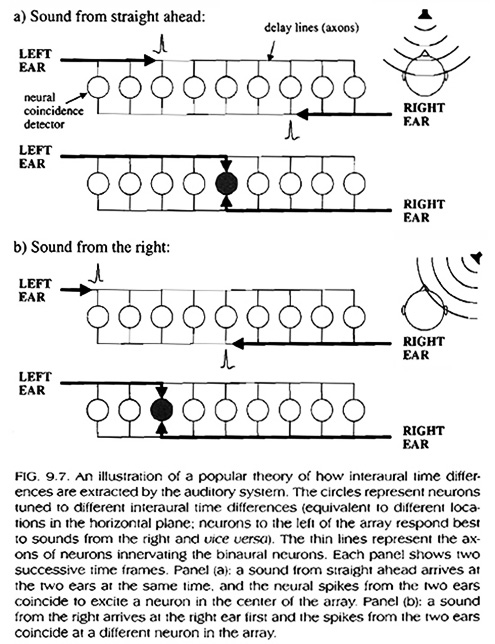

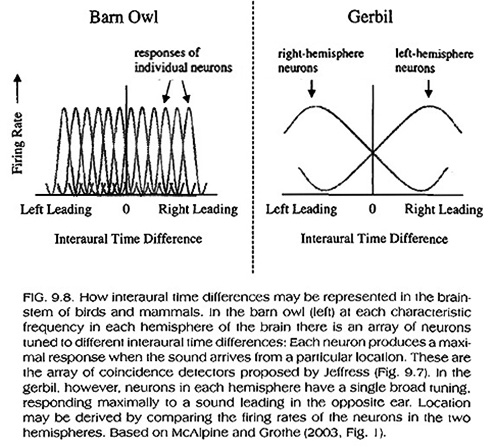

To explain ITD (IPD) detection, the American psychologist Lloyd Jeffress hypothesized (1948) the presence of a coincidence detector, at the neural level, that uses delay lines to compare arrival times at each ear. His theory is illustrated in the figure, below left (in Plack, 2005: 182).
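The delay-line and coincidence-detector idea can be approximated in software as a search over candidate delays: each candidate lag plays the role of one delay line, and the sum of products of the two ear signals at that lag plays the role of that detector's response. The sketch below is a signal-processing analogy under those assumptions, not a model of actual neurons; the function name and test signals are invented.

```python
def best_lag(left, right, max_lag):
    """Lag of `right` relative to `left`, in samples, with the highest score.

    A positive result means the sound reached the left ear first.
    """
    def score(lag):
        # Sum of products of left[i] and right[i + lag] over overlapping samples.
        total = 0.0
        for i, sample in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                total += sample * right[j]
        return total

    return max(range(-max_lag, max_lag + 1), key=score)


click = [0.0, 0.0, 1.0, 0.5, 0.0, 0.0, 0.0, 0.0]
delayed = [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.5, 0.0]  # same click, 3 samples later

print(best_lag(click, delayed, max_lag=4))
```

Dividing the winning lag by the sample rate would give an ITD estimate in seconds.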


Jeffress's model has been partially confirmed by physiological evidence from birds (e.g. barn owl). However, evidence from mammals (e.g. gerbil; above-right) suggests broad sensitivity to just two ranges of interaural time differences at each characteristic frequency (i.e. per neuron; see the figure, above; in Plack, 2005: 183), corresponding to the period of that frequency.

IID detection can be explained in terms of the associated difference between excitatory and inhibitory activity in the two ears when stimulated by signals that display IIDs.

Loyola Marymount University - School of Film & Television